
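This post summarizes what I studied from '모두를 위한 딥러닝 시즌 2' (Deep Learning for Everyone, Season 2) and 'pytorch로 시작하는 딥 러닝 입문' (Introduction to Deep Learning with PyTorch).

My own opinions are mixed in, so some of the content may be inaccurate.

Complex models need an enormous amount of data!

⇒ But for various reasons (time and so on), training on all of it at once is not feasible.

⇒ So couldn't we train faster by learning from only part of the data at a time?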

1. Mini Batch and batch size

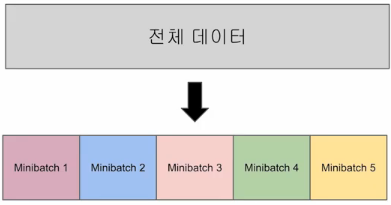

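Mini-batch: when the whole dataset is split into smaller units and training proceeds one unit at a time, each of those units is a mini-batch!

With mini-batch training, you take just one mini-batch and train on it, then take the next mini-batch and train on that, and so on.

Once every sample in the dataset has been used for training once, 1 epoch is complete.

The size of a mini-batch is called the batch size.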
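As a minimal sketch of the idea above, the following plain-Python snippet cuts a small dataset into mini-batches. The helper name `make_minibatches` and the toy values are made up for illustration; in real code a PyTorch `DataLoader` does this job.

```python
def make_minibatches(data, batch_size):
    """Split `data` into consecutive chunks of at most `batch_size` items."""
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]


samples = [10, 20, 30, 40, 50]  # toy dataset with 5 samples
batches = make_minibatches(samples, batch_size=2)

# Going through every mini-batch once corresponds to one epoch.
for i, batch in enumerate(batches, start=1):
    print(f"mini-batch {i}: {batch}")
```

Note that the last mini-batch holds a single sample, because 5 is not divisible by 2.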

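Running gradient descent one mini-batch at a time is called 'mini-batch gradient descent'. Plain (full-batch) gradient descent converges to the optimum very stably but needs a lot of computation per update, whereas mini-batch gradient descent trains faster, at the cost of noisier updates.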
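To make the contrast concrete, here is a rough sketch in plain Python that fits a single weight `w` to the toy relation y = 2x, once with full-batch updates and once with mini-batches of 2. The functions `grad_mse` and `train`, the data, and the hyperparameters are all assumptions chosen for illustration, not anything taken from the lecture.

```python
import random


def grad_mse(w, batch):
    """Gradient of mean((w*x - y)^2) with respect to w over one batch."""
    return sum(2 * x * (w * x - y) for x, y in batch) / len(batch)


def train(data, batch_size, lr=0.01, epochs=50, seed=0):
    """Run gradient descent; return the final weight and the update count."""
    rng = random.Random(seed)
    data = list(data)
    w, updates = 0.0, 0
    for _ in range(epochs):
        rng.shuffle(data)  # reshuffle every epoch
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            w -= lr * grad_mse(w, batch)  # one parameter update per batch
            updates += 1
    return w, updates


data = [(x, 2.0 * x) for x in [1.0, 2.0, 3.0, 4.0]]
w_full, n_full = train(data, batch_size=len(data))  # full-batch
w_mini, n_mini = train(data, batch_size=2)          # mini-batch

print(f"full batch: w={w_full:.4f} after {n_full} updates")
print(f"mini batch: w={w_mini:.4f} after {n_mini} updates")
```

Over the same number of epochs the mini-batch run performs twice as many updates, each computed from half as much data.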

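The batch size is usually a power of 2. (This is said to make data transfer more efficient.)

The total number of samples divided by the batch size is called the number of iterations: the number of times the parameters W and b are updated (the number of training steps) within one epoch. When the data does not divide evenly, the leftover samples form one smaller final mini-batch, so the count rounds up.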
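The arithmetic can be checked with a few lines of Python. This is a sketch that assumes the last, smaller mini-batch is kept rather than dropped; the function name `iterations_per_epoch` is made up for illustration.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of parameter updates in one epoch, keeping a partial last batch."""
    return math.ceil(num_samples / batch_size)


print(iterations_per_epoch(2000, 200))  # evenly divisible case
print(iterations_per_epoch(5, 2))       # 5 samples, batch size 2
```

With 5 samples and a batch size of 2, the mini-batches hold 2, 2, and 1 samples, giving 3 iterations per epoch.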

2. Data Load

PyTorch has many useful tools that make data easier to handle. Here we look at two of them: Dataset and DataLoader.

With these, mini-batch training, data shuffling, and even parallel loading become simple to do.

The basic usage is to define a Dataset and pass it to a DataLoader.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import TensorDataset  # tensor dataset
from torch.utils.data import DataLoader  # data loader

x_train = torch.FloatTensor([[73, 80, 75],
                             [93, 88, 93],
                             [89, 91, 90],
                             [96, 98, 100],
                             [73, 66, 70]])
y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])

# Store the tensors as a dataset
dataset = TensorDataset(x_train, y_train)
```

Once the dataset exists, you can use a DataLoader. A DataLoader takes the dataset and the mini-batch size as its basic arguments. An additional argument is shuffle: setting it to True reshuffles the dataset every epoch, which keeps the model from getting used to the order of the data.
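A quick way to see what a DataLoader hands out is to iterate over it once and print the batch shapes. The sketch below reuses the five samples from this post; the variable names `loader` and `batch_shapes` are only illustrative.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

x = torch.FloatTensor([[73, 80, 75],
                       [93, 88, 93],
                       [89, 91, 90],
                       [96, 98, 100],
                       [73, 66, 70]])
y = torch.FloatTensor([[152], [185], [180], [196], [142]])

loader = DataLoader(TensorDataset(x, y), batch_size=2, shuffle=True)

print(len(loader))  # number of mini-batches per epoch

batch_shapes = []
for xb, yb in loader:
    # Each item is an (inputs, targets) pair for one mini-batch.
    batch_shapes.append((tuple(xb.shape), tuple(yb.shape)))
    print(xb.shape, yb.shape)
```

Which rows land in which mini-batch changes from run to run because of `shuffle=True`, but the batch sizes stay 2, 2, and 1.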

```python
# Set up the data loader
dataloader = DataLoader(dataset, batch_size=2, shuffle=True)

model = nn.Linear(3, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-5)

nb_epochs = 20
for epoch in range(nb_epochs + 1):
    for batch_idx, samples in enumerate(dataloader):
        # Each item pulled from the dataloader comes as a bundled (x, y) pair.
        x_train, y_train = samples

        # Compute H(x)
        prediction = model(x_train)

        # Compute the cost
        cost = F.mse_loss(prediction, y_train)

        # Improve H(x) using the cost
        optimizer.zero_grad()
        cost.backward()
        optimizer.step()

        print('Epoch {:4d}/{} Batch {}/{} Cost: {:.6f}'.format(
            epoch, nb_epochs, batch_idx+1, len(dataloader),
            cost.item()
        ))
```

3. Custom Dataset

Sometimes you build your own custom dataset by inheriting from torch.utils.data.Dataset.

torch.utils.data.Dataset is the abstract class PyTorch provides for representing a dataset.

```python
import torch


class CustomDataset(torch.utils.data.Dataset):
    def __init__(self):
        # Preprocess the dataset here
        pass

    def __len__(self):
        # Return the length of the dataset, i.e. the total number of samples
        pass

    def __getitem__(self, idx):
        # Return one specific sample from the dataset
        pass
```

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset
from torch.utils.data import DataLoader


class CustomDataset(Dataset):  # build a custom dataset
    def __init__(self):
        self.x_data = [[73, 80, 75],
                       [93, 88, 93],
                       [89, 91, 90],
                       [96, 98, 100],
                       [73, 66, 70]]
        self.y_data = [[152], [185], [180], [196], [142]]

    def __len__(self):
        return len(self.x_data)

    def __getitem__(self, idx):
        x = torch.FloatTensor(self.x_data[idx])
        y = torch.FloatTensor(self.y_data[idx])
        return x, y


dataset = CustomDataset()  # create the dataset object

dataloader = DataLoader(
    dataset,
    batch_size=2,
    shuffle=True,  # reshuffle every epoch so the model cannot memorize the order
)

# ---------------------------------------------


class MultivariateLinearRegressionModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(3, 1)

    def forward(self, x):
        return self.linear(x)


# The training data comes from CustomDataset through the dataloader above.

# Initialize the model
model = MultivariateLinearRegressionModel()

# Set up the optimizer
optimizer = optim.SGD(model.parameters(), lr=1e-5)

nb_epochs = 20
for epoch in range(nb_epochs + 1):
    for batch_idx, samples in enumerate(dataloader):
        x_train, y_train = samples

        # Compute H(x)
        prediction = model(x_train)

        # Compute the cost
        cost = F.mse_loss(prediction, y_train)

        # Improve H(x) using the cost
        optimizer.zero_grad()
        cost.backward()
        optimizer.step()

        # Print a log line for every batch
        print('Epoch {:4d}/{} Batch {}/{} Cost: {:.6f}'.format(
            epoch, nb_epochs, batch_idx+1, len(dataloader),
            cost.item()
        ))

# There are 3 iterations here! (weight and bias updates per epoch)
```
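To see how the two methods of a custom dataset get used, the sketch below calls them directly and then pulls one mini-batch through a DataLoader. It repeats the `CustomDataset` class from this post so that it runs on its own; shuffling is left off here (an assumption made for this example) so the first batch is predictable.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class CustomDataset(Dataset):
    def __init__(self):
        self.x_data = [[73, 80, 75], [93, 88, 93], [89, 91, 90],
                       [96, 98, 100], [73, 66, 70]]
        self.y_data = [[152], [185], [180], [196], [142]]

    def __len__(self):
        return len(self.x_data)

    def __getitem__(self, idx):
        x = torch.FloatTensor(self.x_data[idx])
        y = torch.FloatTensor(self.y_data[idx])
        return x, y


dataset = CustomDataset()

print(len(dataset))   # len() calls __len__
x0, y0 = dataset[0]   # indexing calls __getitem__(0)
print(x0, y0)

# The DataLoader fetches individual samples and stacks them into a batch.
xb, yb = next(iter(DataLoader(dataset, batch_size=2)))
print(xb.shape, yb.shape)
```

Each sample is a pair of 1-D tensors, and the DataLoader stacks two of them into tensors of shape (2, 3) and (2, 1).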

<Reference>

https://deeplearningzerotoall.github.io/season2/lec_pytorch.html
